Social Media Giants Fight Against Fake News: Moving Forward or Stagnant?

The topic of fake news has brought attention to the actions (or lack thereof) of social media giants. Let's explore whether they are making progress or remaining stagnant.

In recent years, social media platforms have taken steps to combat the spread of disinformation and fake news on their sites. It is an ongoing battle, and the sheer volume of content poses significant challenges. Despite their efforts, the platforms are frequently criticized for inadequate or inappropriate actions.

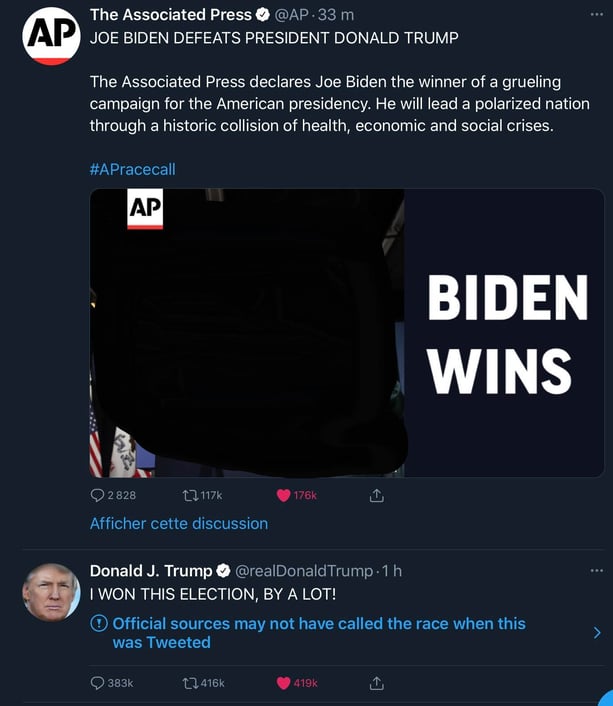

Moderating or even deleting controversial content and the accounts of its authors is a complex challenge, and a prerogative that many believe should not rest solely with the companies that manage these platforms. Twitter Inc.'s flagging of tweets from Donald Trump's account in November 2020, and its subsequent suspension of his profile in January 2021, illustrate the point.

1. Twitter and Fake News

2. Alphabet and Fake News

3. Meta Group and Fake News

4. TikTok and Fake News

5. International Institutions and Fake News

6. Is Quelling Fake News a Real Interest for Social Networks?

With the release of our new guide, "Fake News: How to Detect and Combat Disinformation on Social Media", we took a look at the different initiatives that Facebook, WhatsApp, YouTube, Twitter, Google, and TikTok are taking to manage fake news.

Alongside initiatives by media, journalists, and associations, social network publishers have been working for several years on improving their content algorithms, setting up election war rooms, developing alert functions, and employing moderators to combat fake news and disinformation.

Twitter, for example, regularly cleans up accounts that create or spread false news.

Facebook is thinking of rating its users according to the information they report or of displaying banners on questionable information.

Since 2017, these same platforms have initiated partnerships with certain journalists to work on fact-checking. However, since 2018, contributing journalists have frequently voiced their disappointment with the means used and with the attitude of certain social networks in this fight.

It should be noted that the social network giants now work on a case-by-case basis on specific sectors and sensitive areas particularly affected by fake news.

An example of a platform taking action to combat the spread of false information about vaccines is Facebook. In 2019, prior to the COVID-19 pandemic, Facebook implemented measures such as banning misleading vaccination advertisements and reducing the visibility of groups and pages that disseminate this type of information in search results and the "newsfeed."

In 2020, social networks stepped up their efforts, spurred by major events such as the US presidential election and the worldwide health emergency. Regrettably, those same events also fueled the dissemination of false information, particularly concerning treatments, statistics, and vaccinations.

Twitter and Fake News

Twitter said it labeled 300,000 misleading posts about the US election between 27 October and 11 November 2020. Of these 300,000 labeled tweets, only 456 were blocked from being shared, commented on, or liked on the platform. Almost half of Donald Trump's tweets were flagged by the social network in the days following the election: the president claimed, without any evidence, that he had won the election and that there had been massive fraud.

More generally, Twitter intends to limit interactions with "dubious" information, such as retweets, quotes, and likes. Once a tweet is labeled as misleading, its chances of going viral diminish: users can no longer retweet or respond to it without appending a comment. Furthermore, Twitter's algorithms stop promoting it, which means it won't appear in users' primary timelines.

Twitter flagged a tweet from Trump on Nov 7, 2020, before official election results were announced.

In addition, Twitter already has a crowdsourced fact-checking and moderation tool, Birdwatch, to counter viral disinformation: verified Birdwatch contributors will be able to add a tweet they consider problematic to a "watch list" and attach a "note" to it. This note will be visible to everyone and adds context to the publication.

Birdwatch's primary aim is to provide an additional level of fact-checking and context to tweets that may not explicitly breach Twitter's policies. It can navigate ambiguous territories to combat misinformation on a variety of subjects beyond politics and science, such as health, sports, entertainment, and other intriguing online phenomena.

TL;DR: Birdwatch puts some outrageous theories to bed with added clarification!

Alphabet and Fake News

Google Search Engine

For its part, Google has been working for a number of years to reduce the proportion of fake news that could be present in its search results and on YouTube, in particular through work on 4 axes:

- The revision of its algorithms

- The fight against low-quality sources

- The contextualization of information through recognized sources

- Better training of search quality assessors who work to identify low-quality content

During the fall of 2020, amidst the overwhelming amount of misleading information regarding COVID-19 vaccines, Google implemented information panels in its search results to guide users toward credible sources on the topic of vaccination.

YouTube

All the major social media sites have been affected by fake news. And the most visited in the world, YouTube, is no exception.

According to a study by BMJ Global Health, over 25% of the most watched COVID-19 videos on YouTube in spoken English contain misleading or inaccurate information.

Following the slew of COVID-19 misinformation on topics such as the virus's origin and home remedies, YouTube launched video fact-checking in the U.S. in August 2020, after the initiative had been piloted the previous year in other countries such as Brazil and India. Fact checks appear as information panels when users search for sensational topics, like "What can I use to recover from COVID-19 at home quickly?" Selected media and organizations are also able to place a warning on a video and set the record straight.

During the fall of 2020, Google's video platform also began featuring information panels aimed at combating "fake news" about vaccines. Furthermore, YouTube decided in December 2020 to remove videos containing false claims of massive fraud during the United States presidential election.

Meta Group and Fake News

Facebook also announced in December 2020 that it would be removing false claims about the COVID-19 vaccine that have been disproven by public health experts on Facebook and Instagram.

Facebook has also tried to get its users to stop and think about a topic before sharing it. This "informative friction", a notion akin to a "speed bump", provides users with the source and date of the content, giving them more context before they press the almighty 'Share' button.

WhatsApp

The Facebook misinformation scandal has left us with a sense of skepticism toward forwarded messages on WhatsApp too. We often find ourselves scrutinizing the validity of their claims, much like John Nash in the film "A Beautiful Mind."

In August 2020, WhatsApp implemented a new feature that identifies "viral" messages: those that have been extensively shared and were not written by a close contact. For these messages, 'Search the web' is a built-in search function that lets users easily look up additional information about the news in the message via their browser.

On top of that, new limits were set on how many times a forwarded message can be sent at once to maintain the private nature of WhatsApp.

TikTok and Fake News

In December 2020, TikTok announced measures to curb the spread of anti-vaccine misinformation. The social network announced:

- The creation of a "Vaccine Information Centre", which will offer videos from official institutions such as the WHO or from influencers such as Hugo Decrypts

- The introduction of a "vaccine" tag to detect videos mentioning vaccines. A banner with a link to the information center will be on top of every video from 21 December

- Strengthening its ability to remove false information about vaccines through its moderation work and community guidelines

In October 2020, AFP announced a partnership with TikTok to fight misinformation, initially limited to a few countries in APAC and Oceania. AFP teams are reviewing videos to identify and address any potentially inaccurate or deceptive content in countries including the Philippines, Indonesia, Pakistan, Australia, and New Zealand.

International Institutions and Fake News

The European Commission has taken a strong stance against misinformation, particularly on social media platforms. Its latest guidelines, released in June 2020, call on major social media platforms to provide monthly reports on their efforts to combat fake news. The Commission also stresses the importance of increased collaboration between these platforms and fact-checking organizations.

Beyond social networks, the World Health Organisation (WHO) announced in October 2020 that it was collaborating with Wikipedia, with which it shares the latest information on COVID-19. Launched in 2001, Wikipedia ranks among the 10 most visited websites worldwide. Many people rely on it as a trusted source of health-related information.

The aim is to make the latest and most reliable health information available on Wikimedia Commons. This includes infographics, videos, and other public health materials translated into almost 200 languages.

Is Quelling Fake News a Real Interest for Social Networks?

It is quite apparent that the widespread dissemination of false information is having a detrimental effect on multiple platforms.

According to journalists' analysis, on the day of the US election, the primary social media networks such as Twitter, Facebook, and YouTube were inundated with a deluge of false information. It was noted that moderation was almost non-existent in non-English languages. In response, the US Senate has twice summoned the heads of Google, Facebook, and Twitter to explain their responsibility for content moderation, as well as allegations of information manipulation.

Moderation and deletion policies on platforms can be complex, leading to tense debates about who has the power and responsibility for content removal. These discussions often reflect differing and divisive viewpoints. For example, many internet users and celebrities called for the deletion of Donald Trump's social media accounts. Yet when Twitter actually removed his account, other voices, especially political figures, spoke out against the excessive power of the tech giants (the "GAFA"), arguing that the removal violated freedom of expression and amounted to censorship.

In addition, many observers, including economists, sociologists, and journalists, believe that social networks are not truly committed to combating fake news because doing so would go against their business model. In this view, the very characteristics of fake news tend to strongly increase the time users spend on social networks and their attraction (even addiction) to them, which is the holy grail of the attention economy!

Written by Christophe Asselin

Christophe is Senior Insights & Content Specialist @ Onclusive. A fan of the web since Compuserve, Lycos, Netscape, Yahoo!, Altavista, Ecila and 28k modems, and of e-reputation since 2007, he enjoys discussing and writing about monitoring and social listening, the internet, brands, usage, lifestyles, and best practices. He is an expert in Onclusive Social (formerly Digimind Social).